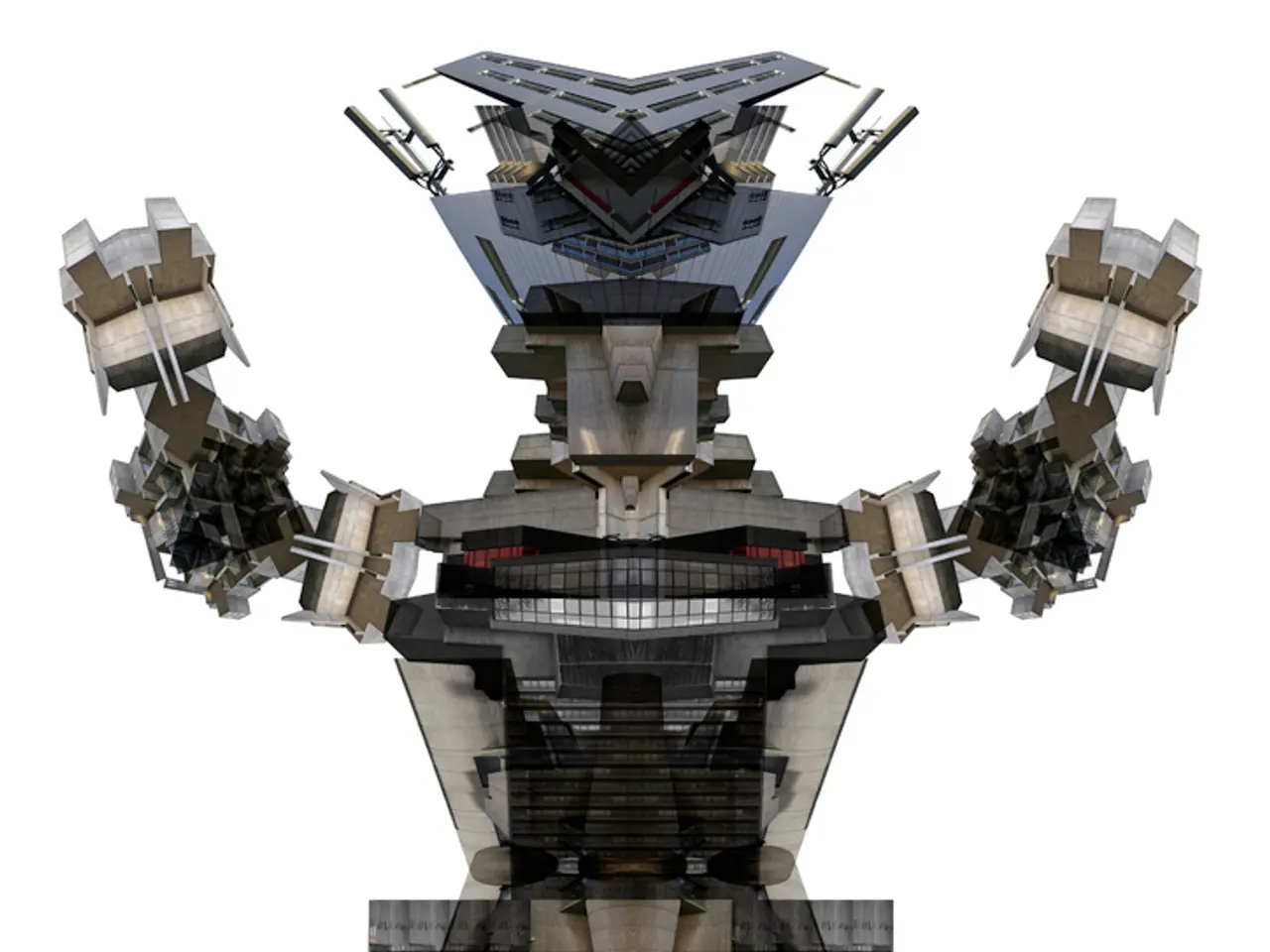

Understanding their own anatomy: a vision-based system teaches machines to comprehend their physical structure

Neural Jacobian Fields (NJF) Could Make Robotics More Accessible

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced Neural Jacobian Fields (NJF), a system that lets robots learn an internal model of their own bodies using deep neural networks [1][2][4]. The approach could make robotics more affordable, adaptable, and accessible to a wider audience.

The NJF system, published in Nature on June 25, 2025, works by inferring a dense visuomotor Jacobian field directly from video observations, typically from a single camera [1][2][4]. This field encodes how every 3D point on a robot's visible surface responds to small changes in its actuators or motor commands, effectively mapping motor inputs to the resulting motion across the robot's whole shape.
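In symbols, the idea can be sketched as a first-order linearization. The notation is shorthand introduced here for illustration and is not taken from the paper: $x$ is a 3D point on the robot's visible surface, $u \in \mathbb{R}^m$ is the vector of $m$ actuator commands, and $J(x)$ is the Jacobian that the field assigns to that point.

$$
\Delta x \approx J(x)\,\Delta u, \qquad J(x) = \frac{\partial x}{\partial u} \in \mathbb{R}^{3 \times m}
$$

Under this reading, a single small command change $\Delta u$ predicts a displacement for every visible point at once.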

How NJF Enables Learning an Internal Model

NJF learns from observation: video input shows the robot's current configuration and the outcome of each motor command. Deep neural networks are then trained to predict the Jacobian, which relates actuator changes to the spatial motion of all visible points [1]. Because the model is implicit, the robot learns through experience how sensitive each point on its body is to each command, without needing explicit geometric or physics-based models [1].
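As a rough illustration of what learning a Jacobian from observation means, the sketch below recovers the Jacobian of a single tracked point from simulated data. It is not the paper's method: NJF trains a neural network on video, whereas this toy example substitutes ordinary least squares on synthetic pairs of command changes and point displacements. The function name `fit_point_jacobian`, the four-actuator setup, and the noise levels are all assumptions made for illustration.

```python
import numpy as np


def fit_point_jacobian(delta_u, delta_x):
    """Estimate a 3 x m Jacobian J such that delta_x ≈ delta_u @ J.T.

    delta_u: (n_samples, m) small changes in actuator commands.
    delta_x: (n_samples, 3) observed displacements of one 3D point.
    """
    # lstsq solves delta_u @ X ≈ delta_x for X of shape (m, 3), i.e. J transposed.
    j_transposed, *_ = np.linalg.lstsq(delta_u, delta_x, rcond=None)
    return j_transposed.T


# Synthetic stand-in for "watching the robot move" (hypothetical data).
rng = np.random.default_rng(0)
true_j = rng.normal(size=(3, 4))                 # unknown ground truth, 4 actuators
delta_u = rng.normal(scale=0.05, size=(200, 4))  # random small command changes
noise = rng.normal(scale=1e-4, size=(200, 3))    # stand-in for tracking noise
delta_x = delta_u @ true_j.T + noise             # displacements the camera would see

estimated_j = fit_point_jacobian(delta_u, delta_x)
max_error = float(np.max(np.abs(estimated_j - true_j)))
print("largest absolute error in the estimated Jacobian:", max_error)
```

With enough varied commands, the estimate approaches the true matrix. A learned field plays the same role, but as described above it predicts such a matrix for every visible point from camera input rather than fitting one point at a time.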

Because the model is learned from ongoing observation, it can accommodate changes in robot morphology, materials, and configuration without reprogramming [1][4]. This adaptability sets NJF apart from traditional robotics models, which require explicit, hand-designed kinematic or dynamic equations.

Potential Applications of NJF in Robotics

NJF's versatility opens up several possibilities in robotics. For instance, it enables precise control of robots that are hard to model, such as soft, compliant structures, where traditional explicit models fail or become too complex [1]. Moreover, robots can "understand their bodies" and self-calibrate using only one camera, which sharply reduces the sensor hardware they need [2][4].

NJF decouples hardware design from control modeling, potentially enabling more bio-inspired and creative robot morphologies without the overhead of engineering their dynamics explicitly [1]. This design flexibility could also support advanced manipulation and locomotion, including on unconventional platforms, because the system captures the motor-to-motion relationship at every visible point.
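To make the link between a Jacobian field and control concrete, the sketch below picks a command change that moves a set of tracked points toward desired displacements. It uses damped least squares, a standard technique chosen here for illustration; the source does not describe the paper's controller, so this should not be read as NJF's actual control law. The function name `solve_command`, the array shapes, and the damping value are assumptions.

```python
import numpy as np


def solve_command(jacobians, desired_motion, damping=1e-6):
    """Find the command change that best produces the desired point motions.

    jacobians:      (k, 3, m) one 3 x m Jacobian per tracked point.
    desired_motion: (k, 3) desired displacement of each point.
    Returns an (m,) command change from damped least squares.
    """
    num_points, _, num_actuators = jacobians.shape
    # Stack all per-point Jacobians into one (3k, m) linear system.
    a = jacobians.reshape(num_points * 3, num_actuators)
    b = desired_motion.reshape(num_points * 3)
    # Damping keeps the solve well conditioned when some commands barely move anything.
    lhs = a.T @ a + damping * np.eye(num_actuators)
    return np.linalg.solve(lhs, a.T @ b)


# Hypothetical example: 50 tracked points, 4 actuators.
rng = np.random.default_rng(1)
jacobians = rng.normal(size=(50, 3, 4))
target_command = np.array([0.02, -0.01, 0.03, 0.0])
desired_motion = jacobians @ target_command  # motions that this command would cause

recovered = solve_command(jacobians, desired_motion)
print("recovered command change:", recovered)
```

In a closed loop, a step like this would be repeated: observe the robot, query the Jacobians at the current configuration, solve for a small command change, apply it, and observe again.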

Furthermore, the system can adapt to damage, wear, or physical changes in robot structure on the fly, improving robustness and autonomy [1][2]. This robust adaptation could be crucial for robots operating in messy, unstructured environments without expensive infrastructure.

Looking Ahead

The researchers behind NJF are exploring ways to improve generalization, handle occlusions, and extend the model's ability to reason over longer spatial and temporal horizons [3]. The system does not yet generalize across different robots, and it lacks force or tactile sensing, which limits its effectiveness on contact-rich tasks. Even so, NJF could expand the design space for robotics, particularly for soft and bio-inspired robots.

The paper on NJF brought together computer vision and self-supervised learning work from the Sitzmann lab and expertise in soft robots from the Rus lab [2]. As that research continues, NJF may help make adaptable robots more affordable and more widely accessible.

The cited content comes primarily from recent 2025 research by MIT CSAIL and related documentation, including an in-depth YouTube explainer on the visuomotor Jacobian field concept [1][2][4].

- The research was supported by the Solomon Buchsbaum Research Fund, an MIT Presidential Fellowship, the National Science Foundation, and the Gwangju Institute of Science and Technology.

- NJF lets a robot learn how specific points on its body deform or shift in response to an action, building a dense map of controllability.
