Tesla's "Vision" Assist System Struggles in Parking Compared to Competitors

Tesla's Parking Assistance System Prone to Frequent Errors

Tesla's camera-based "Vision" assist system is more error-prone than competitors' systems, according to numerous expert reports. Unlike many competitors, which use ultrasonic sensors, Tesla relies on cameras alone, a decision made by Elon Musk. The reports, commissioned by German courts, were obtained by "Der Spiegel." Tesla did not respond to the magazine's questions about the findings.

In testing, the "Vision" system in Tesla models often fails to detect simple obstacles such as a cardboard box or a bike rack. Hazard warnings appear and disappear arbitrarily. Some objects are displayed only as an undefined cloud, vanish from the display, or are not recognized at all, including a child sitting in front of the car.

A report on the Model Y, Tesla's bestseller, compares a camera-only car with one that still has ultrasonic sensors. The expert concludes that "Tesla Vision is not equivalent in function." Another report compared two Tesla Model 3s, one with ultrasonic sensors and one with cameras, and a Peugeot with ultrasonic sensors. The camera-equipped Tesla did not deliver consistent results, and its parking assist was deemed "not sufficiently functional."

Cameras vs. Sensors

The transition from ultrasonic sensors to cameras for Tesla's assist systems was intended to reduce costs and simplify hardware. However, cameras, when used independently, have limited sensing capabilities, especially in conditions like low light, heavy rain, or direct glare. Multi-sensor setups, like those used by many competitors, offer more robust obstacle detection and all-weather performance.

The "Vision" system relies heavily on neural networks to interpret camera data. Although advanced, these networks can still misinterpret reflections, shadows, or unusual visual patterns, leading to false positives or missed obstacles. Such issues are less common in multi-sensor setups. In addition, the move to a camera-only approach required significant software rewrites, and some expert reviews suggest that Tesla's parking assist features are currently less reliable, particularly in tight spaces.

Tesla's camera-based system faces more challenges in parking compared to competitors because it lacks the complementary sensing (ultrasonic, radar, or LiDAR) that provides redundancy and reliability. This makes Tesla's system more prone to errors in challenging conditions, such as parking in tight spaces or detecting small obstacles, where competitors' multi-sensor systems perform more consistently.
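The redundancy argument can be made concrete with a small calculation. The Python sketch below is purely illustrative: the function name and the miss rates are hypothetical and are not taken from the expert reports, and it assumes that the sensors fail independently of one another, which real hardware only approximates.

```python
import math


def combined_miss_rate(miss_rates):
    """Chance that every sensor misses the same obstacle.

    Assumes the sensors fail independently (a simplification), so the
    individual miss probabilities can simply be multiplied.
    """
    for rate in miss_rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("each miss rate must be between 0 and 1")
    return math.prod(miss_rates)


# Hypothetical numbers chosen only for illustration, not measured values.
camera_only = combined_miss_rate([0.10])
camera_plus_ultrasonic = combined_miss_rate([0.10, 0.20])

print(f"camera only:         {camera_only:.3f}")
print(f"camera + ultrasonic: {camera_plus_ultrasonic:.3f}")
```

Under these made-up figures, adding a second, independent sensor cuts the chance of missing an obstacle to a fraction of the camera-only value. The benefit shrinks if both sensors tend to fail in the same conditions.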

Sources: ntv.de, as

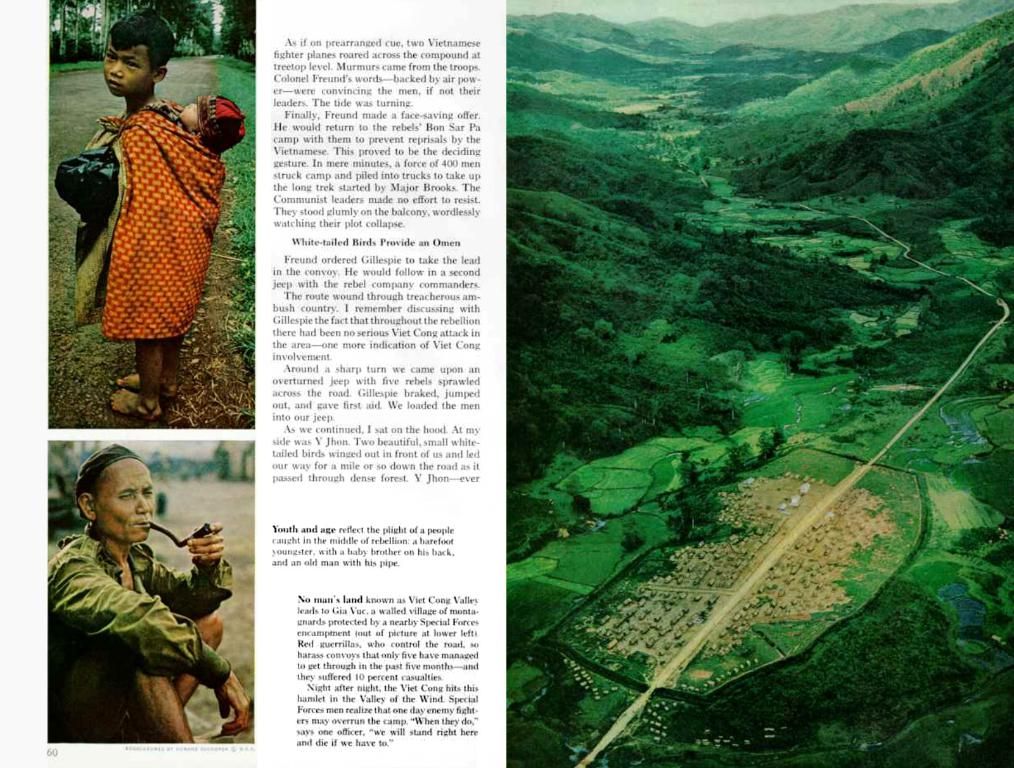

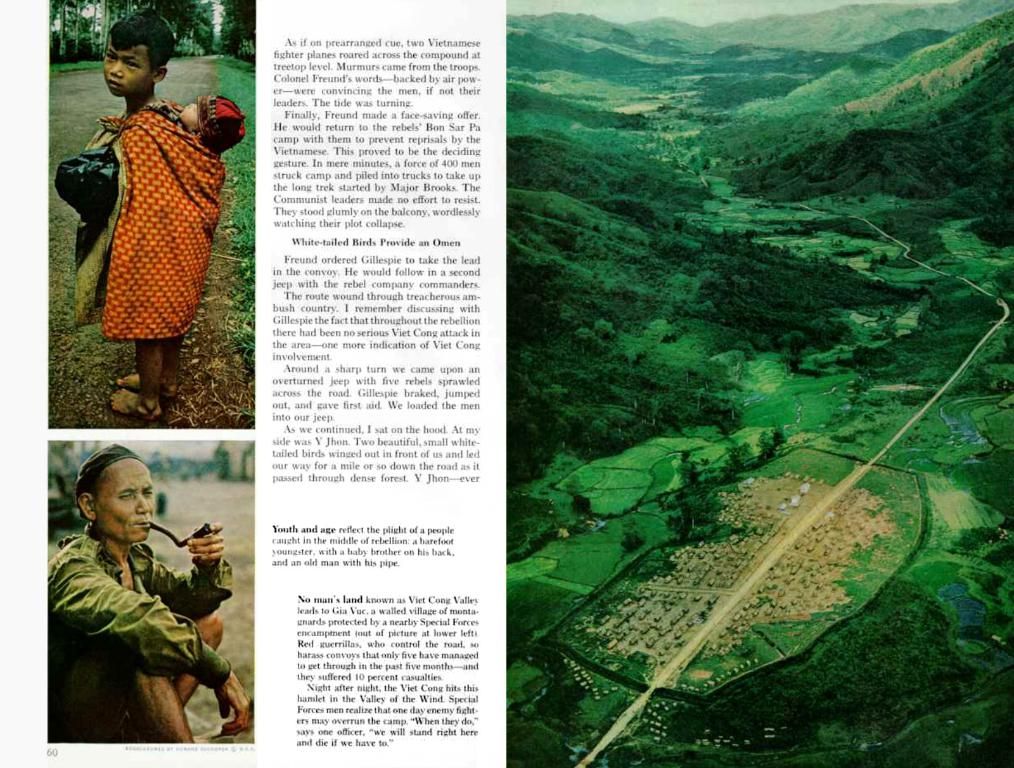

- Tesla uses cameras for its assist systems instead of the ultrasonic sensors that many competitors rely on. Its "Vision" system is more error-prone, especially in parking, according to expert reports commissioned by German courts and obtained by "Der Spiegel."

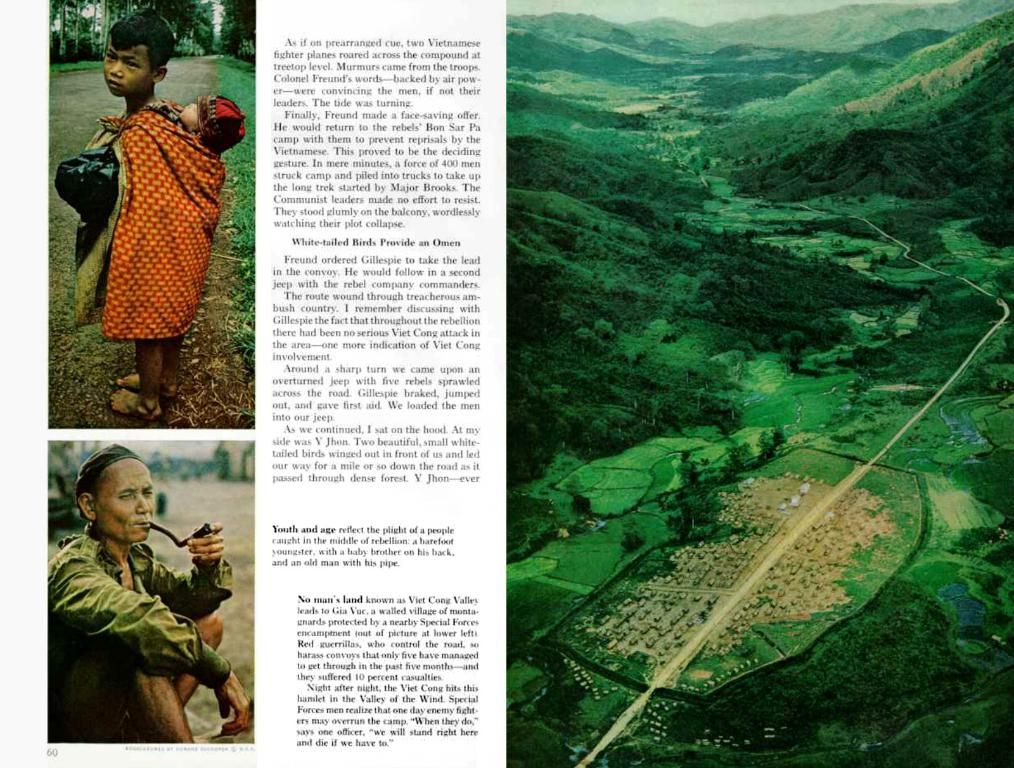

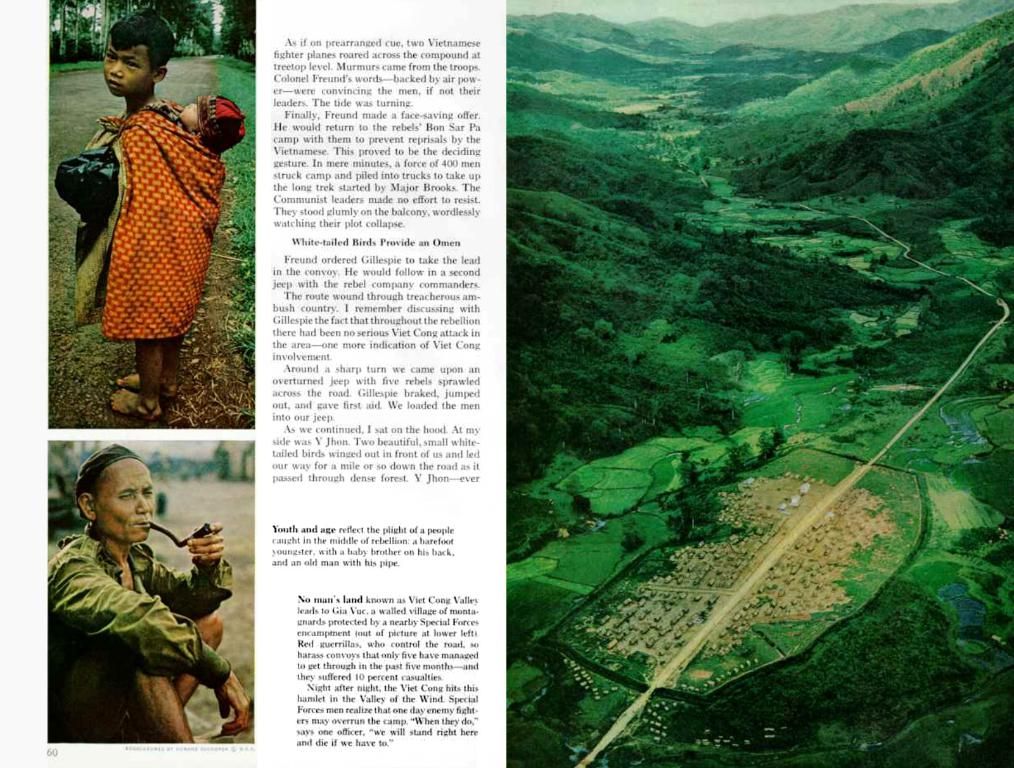

- In the automotive industry, multi-sensor systems are prevalent because they offer more robust obstacle detection and all-weather performance than camera-only systems such as Tesla's "Vision," which relies on neural networks and is prone to false positives and missed obstacles.

- Tesla switched its assist systems from ultrasonic sensors to cameras to cut costs and simplify hardware. However, without complementary sensing such as ultrasonic, radar, or LiDAR, its parking assist is more vulnerable to errors in challenging conditions.