How Typographical Errors Outwit Sophisticated Artificial Intelligence Systems

### Breakthrough Method Unveiled: Best-of-N (BoN) Jailbreaking

Researchers from several institutions have published a new method called Best-of-N (BoN) Jailbreaking. The technique is designed to bypass the safeguards of large language models (LLMs) and make them produce undesirable or harmful responses.

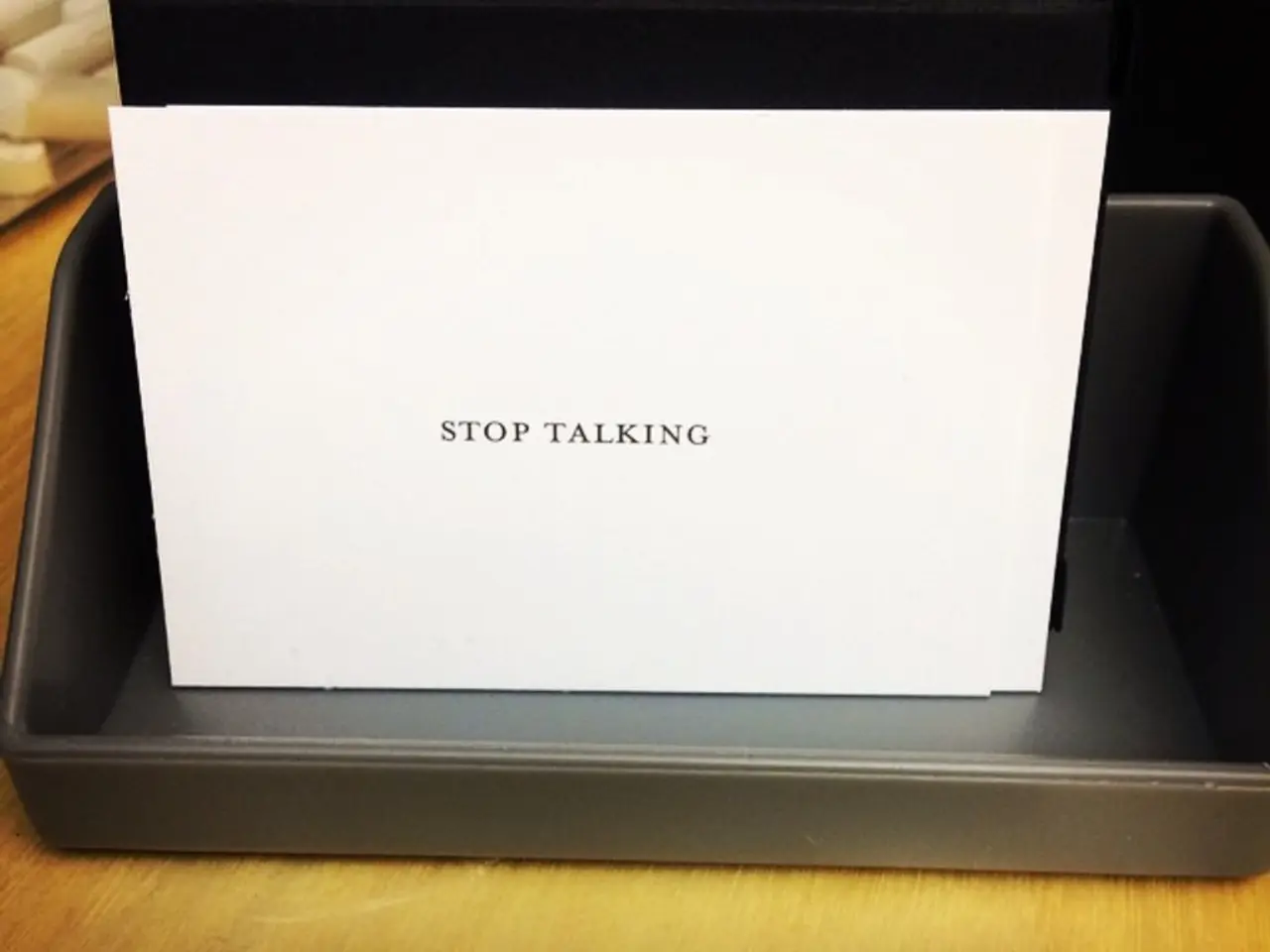

Jailbreaking, in this context, means exploiting weaknesses in an AI model's safety mechanisms with strategically crafted prompts. BoN makes many jailbreak attempts and counts the attack as a success if any single attempt succeeds. Because every additional attempt is another chance to get through, this repeated approach is more effective than single-shot methods.
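Why does "counting any successful attempt" make the attack so strong? A minimal sketch, under the idealized assumption (ours, not the researchers') that every attempt succeeds independently with the same probability `p`: the chance that at least one of `N` attempts gets through is 1 - (1 - p)^N. The function names below are hypothetical and only illustrate this arithmetic; a Monte Carlo check is included to confirm the closed form.

```python
import random


def any_of_n_success(p: float, n: int) -> float:
    """Probability that at least one of n attempts succeeds.

    Assumes (idealization) each attempt succeeds independently
    with the same probability p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    # Complement of "all n attempts fail".
    return 1.0 - (1.0 - p) ** n


def simulate_any_of_n(p: float, n: int, trials: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity.

    A trial counts as a success if any of its n simulated attempts succeeds.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        if any(rng.random() < p for _ in range(n)):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    # How the overall success rate grows with the number of attempts
    # when a single attempt succeeds only 1% of the time.
    for n in (1, 10, 100, 1000):
        print(n, round(any_of_n_success(0.01, n), 3))
```

Under this toy model, an attempt that works only 1% of the time reaches roughly 63% overall success after 100 tries. Real attempts against a model are not guaranteed to be independent or equally likely to succeed, so this illustrates the intuition only and is not the researchers' own analysis.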

The BoN technique relies on prompt variation: many slightly altered versions of the same request, such as copies with typos, random capitalization or shuffled letters, are tried one after another until one gets past the model's defenses. The process can be automated, which allows the model's weaknesses to be probed quickly. These variations act as adversarial inputs, designed to be more likely to slip past the model's safety mechanisms, often by exploiting the model's biases.

The implications of BoN Jailbreaking are significant for AI security. It highlights the vulnerabilities in current AI safety mechanisms, demonstrating how repeated attempts can increase the likelihood of bypassing these defenses. This underscores the need for more robust and adaptive security measures. The effectiveness of BoN suggests that static defense mechanisms may not be sufficient, and AI systems need dynamic strategies that can adapt to evolving threats.

BoN's reliance on adversarial techniques also underscores the importance of adversarial training in AI development, which can make models more resilient to many types of attack. Future AI models should be designed to recognize and withstand sophisticated prompt-engineering techniques.

Researchers are already studying AI jailbreaking, not to undermine chatbot security but to help build stronger defenses against jailbreak-style attacks. The BoN technique works by reformulating a prompt until the chatbot gives a response that violates its safety policies.

The potential for malicious use is evident, for example to extract restricted information from chatbots. However, the code for the BoN project has been made public, which enables further research into more sophisticated defenses, including AI systems that can proactively identify and mitigate potential threats.

Jailbreak research is a cat-and-mouse game between attackers and defenders in AI security. It is pushing the field toward more dynamic and adaptive security strategies and makes continuous innovation in defense mechanisms essential. Benchmarks such as ClearHarm underline the need for diverse and challenging datasets to test how robust AI models are against harmful queries, and they help guide the development of more effective security protocols.

BoN Jailbreaking, which manipulates large language models into bypassing their safety mechanisms, is a significant development in AI research and shows that AI systems need more robust and adaptive safeguards. While attackers reformulate prompts until a chatbot responds in violation of its safety protocols, researchers are working on stronger defenses, and adversarial training stands out as a key way to make models more robust against such attacks.