Machine Trust Detection System utilizing Electroencephalography (EEG) and Galvanic Skin Response (GSR)

A recent study [1] presents two approaches for building real-time trust sensor models for intelligent machines, a step toward machines that can track how much their human collaborators trust them. The models use electroencephalography (EEG) and galvanic skin response (GSR) measurements to infer a user's trust level in the machine as the interaction unfolds.

The approach relies on physiological signals that reflect human cognitive and affective states, such as workload, stress, and emotional valence. Machine learning models process these signals to estimate the user's trust level, typically expressed as one of three categories: Low, Medium, or High.
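Producing an estimate in real time means the continuous EEG and GSR streams have to be cut into short segments that can be processed as soon as they arrive. The sketch below shows one common way to do this, fixed-length overlapping windows. The function name `sliding_windows` and the window and step sizes are illustrative assumptions, not details reported by the study.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, List


def sliding_windows(samples: Iterable[float], window: int, step: int) -> Iterator[List[float]]:
    """Yield each overlapping window of a sample stream as soon as it is complete.

    Hypothetical helper: `window` and `step` are counted in samples, so at a
    sampling rate fs, window=2*fs and step=fs give 2-second windows updated
    every second (illustrative values only).
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    buf: Deque[float] = deque(maxlen=window)  # keeps only the newest `window` samples
    for n, x in enumerate(samples, start=1):
        buf.append(x)
        # Window k spans samples [k*step, k*step + window) and is complete
        # once n == k*step + window samples have arrived.
        if n >= window and (n - window) % step == 0:
            yield list(buf)


# Usage: a toy stream of 10 samples, 4-sample windows, advancing 2 samples at a time.
for w in sliding_windows(range(10), window=4, step=2):
    print(w)
```

Each window would then pass through feature extraction and classification, so the trust estimate refreshes once per step.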

The first approach yields a "general trust sensor model" built on a single feature set shared by all users. The second approach selects a customized feature set for each individual, which improves mean accuracy at the cost of longer training time.
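The difference between the two approaches lies in where feature selection happens: once on pooled data, or separately for each person. The sketch below contrasts the two using a Fisher-score filter, which is an assumption chosen for illustration; the study's actual selection procedure is not described here, and the data layout and function names are hypothetical.

```python
from statistics import fmean, pvariance
from typing import Dict, List, Sequence, Tuple

# Per participant: (feature rows, trust labels). Hypothetical layout for illustration.
Dataset = Dict[str, Tuple[List[List[float]], List[str]]]


def fisher_scores(X: Sequence[Sequence[float]], y: Sequence[str]) -> List[float]:
    """Between-class variance over within-class variance for each feature column."""
    scores = []
    for j in range(len(X[0])):
        col = [row[j] for row in X]
        mu = fmean(col)
        between = within = 0.0
        for label in set(y):
            vals = [v for v, lab in zip(col, y) if lab == label]
            between += len(vals) * (fmean(vals) - mu) ** 2
            within += len(vals) * pvariance(vals)
        if within > 0:
            scores.append(between / within)
        else:  # perfectly tight classes: separable only if the class means differ
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


def top_k(X: Sequence[Sequence[float]], y: Sequence[str], k: int) -> List[int]:
    """Indices of the k highest-scoring features."""
    scores = fisher_scores(X, y)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]


def general_feature_set(data: Dataset, k: int) -> List[int]:
    """First approach: one feature set selected on data pooled across all participants."""
    X = [row for rows, _ in data.values() for row in rows]
    y = [lab for _, labels in data.values() for lab in labels]
    return top_k(X, y, k)


def customized_feature_sets(data: Dataset, k: int) -> Dict[str, List[int]]:
    """Second approach: a separate feature set selected on each participant's own data."""
    return {pid: top_k(rows, labels, k) for pid, (rows, labels) in data.items()}


# Usage: toy data in which each participant's trust shows up in a different feature.
data = {
    "p1": ([[0.0, 5.0], [0.1, 1.0], [1.0, 5.0], [1.1, 1.0]], ["Low", "Low", "High", "High"]),
    "p2": ([[5.0, 0.0], [1.0, 0.1], [5.0, 1.0], [1.0, 1.1]], ["Low", "Low", "High", "High"]),
}
print(customized_feature_sets(data, k=1))  # {'p1': [0], 'p2': [1]}
```

In this toy data a single pooled feature set can keep only one of the two informative features, while the per-participant sets pick the right one for each person. That illustrates why a customized set can be more accurate, and why running selection and training per person takes longer.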

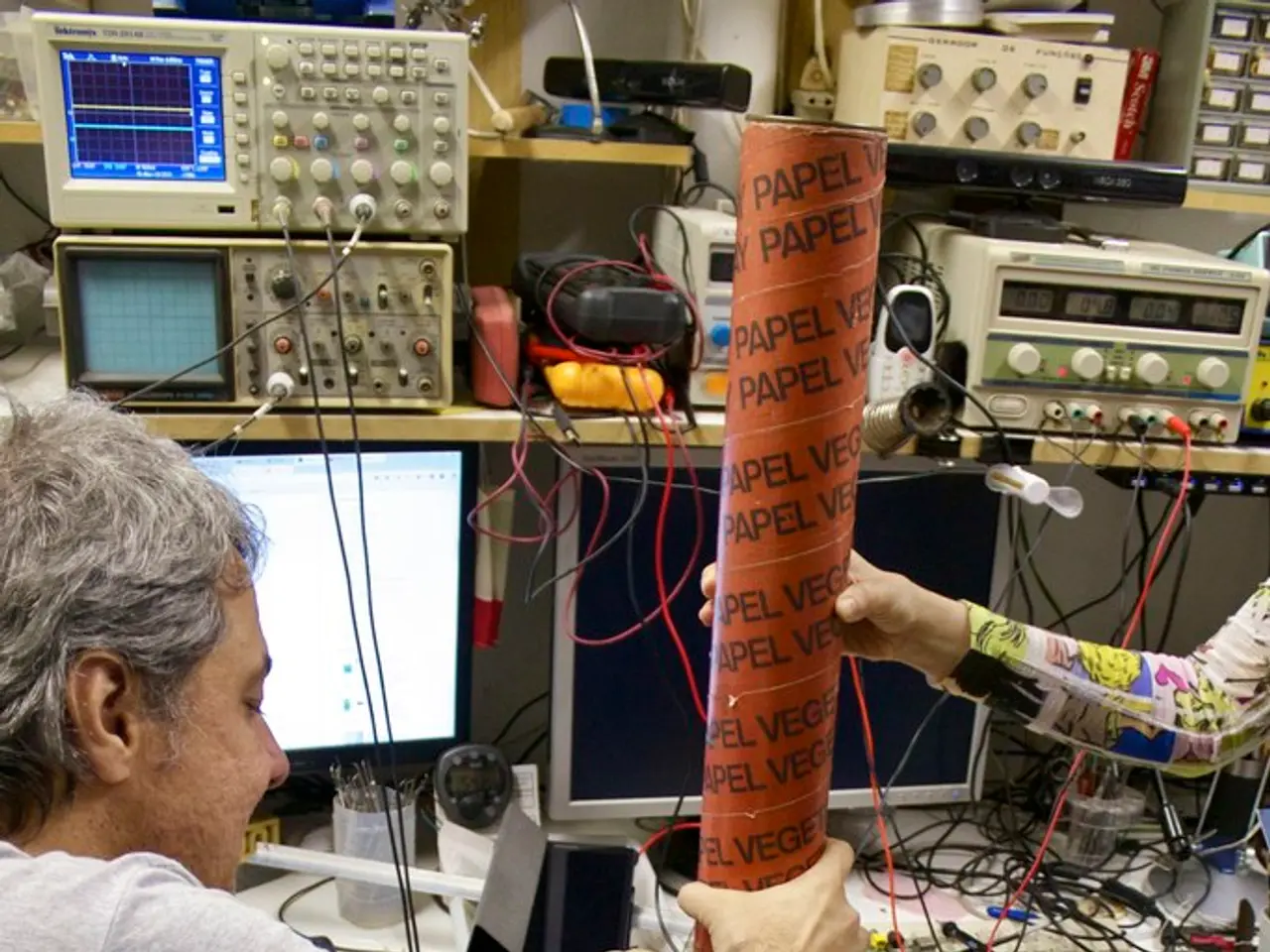

The core process has three stages: signal acquisition and preprocessing, feature extraction, and classification. In the first stage, raw EEG and GSR data are collected in real time and preprocessed. In the second, metrics representing workload, stress, and emotion are computed from these signals. Finally, a classifier, such as a neuro-fuzzy inference system, maps these physiological metrics, together with measures of system performance, to a trust level, producing an interpretable trust estimate.
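As a rough illustration of the second and third stages, the sketch below computes EEG band powers (theta, alpha, beta) and two simple GSR features, then classifies the resulting vector. Everything here is an assumption made for illustration: the band limits, the feature choices, the function names, and especially the classifier, where a nearest-centroid rule stands in for the neuro-fuzzy inference system. Performance metrics could be appended to the same vector.

```python
import cmath
import math
from statistics import fmean
from typing import Dict, List, Sequence

# Hypothetical EEG bands in Hz; the study's exact EEG features are not listed here.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_powers(eeg: Sequence[float], fs: float) -> Dict[str, float]:
    """Stage 2 (EEG): mean periodogram power in each band, via a plain DFT."""
    n = len(eeg)
    mean = fmean(eeg)
    x = [v - mean for v in eeg]  # remove the DC offset; stands in for full preprocessing
    per_band: Dict[str, List[float]] = {name: [] for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        coef = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                per_band[name].append(abs(coef) ** 2 / n)
    return {name: fmean(p) if p else 0.0 for name, p in per_band.items()}


def gsr_features(gsr: Sequence[float], fs: float) -> Dict[str, float]:
    """Stage 2 (GSR): mean skin-conductance level and average change per second."""
    duration = (len(gsr) - 1) / fs
    return {"gsr_level": fmean(gsr), "gsr_slope": (gsr[-1] - gsr[0]) / duration}


def feature_vector(eeg: Sequence[float], gsr: Sequence[float], fs_eeg: float, fs_gsr: float) -> List[float]:
    """Concatenate EEG and GSR features in a fixed (alphabetical) order."""
    feats = {**band_powers(eeg, fs_eeg), **gsr_features(gsr, fs_gsr)}
    return [feats[name] for name in sorted(feats)]


class CentroidTrustClassifier:
    """Stage 3: nearest-centroid classifier, a simple stand-in for a neuro-fuzzy system.

    Features should be standardized first in practice; that step is omitted here.
    """

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[str]) -> "CentroidTrustClassifier":
        self.centroids: Dict[str, List[float]] = {}
        for label in set(y):
            rows = [row for row, lab in zip(X, y) if lab == label]
            self.centroids[label] = [fmean(col) for col in zip(*rows)]
        return self

    def predict(self, x: Sequence[float]) -> str:
        return min(self.centroids, key=lambda lab: math.dist(x, self.centroids[lab]))


# Usage: one second of a pure 10 Hz rhythm puts its power in the alpha band.
fs = 128
eeg = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
print(max(band_powers(eeg, fs).items(), key=lambda kv: kv[1])[0])  # alpha
```

Replacing `CentroidTrustClassifier` with a trained neuro-fuzzy model would leave the first two stages unchanged.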

These trust classifications link physiological states to trust dimensions like reliability, predictability, and transparency, enabling adaptive human-machine interaction. For instance, if high stress and low workload are detected along with low system performance, the trust classifier might infer low trust and trigger explanations or system adaptations to improve collaboration.
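The example above can be written as a small fuzzy rule base. The sketch assumes stress, workload, and performance have already been normalized to [0, 1], and evaluates Mamdani-style rules by picking the strongest-firing trust level instead of defuzzifying. The membership breakpoints, every rule except the first, and the `adapt` hook are hypothetical; a neuro-fuzzy system would learn its membership functions from data rather than fix them by hand.

```python
from typing import Dict, List, Tuple


def falling(x: float, a: float, b: float) -> float:
    """Membership that is 1 below a, 0 above b, and linear in between."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def triangle(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership peaking at b, zero outside (a, c)."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


def terms(x: float) -> Dict[str, float]:
    """Fuzzy terms for an input normalized to [0, 1]; breakpoints are hypothetical."""
    return {
        "low": falling(x, 0.2, 0.5),
        "medium": triangle(x, 0.2, 0.5, 0.8),
        "high": 1.0 - falling(x, 0.5, 0.8),
    }


# (antecedent, trust level). The first rule encodes the example in the text; the rest are illustrative.
RULES: List[Tuple[Dict[str, str], str]] = [
    ({"stress": "high", "workload": "low", "performance": "low"}, "Low"),
    ({"stress": "low", "performance": "high"}, "High"),
    ({"stress": "medium"}, "Medium"),
    ({"performance": "medium"}, "Medium"),
]


def infer_trust(inputs: Dict[str, float]) -> str:
    """Fire every rule (AND = min), keep the strongest firing per level (max), pick the winner."""
    mu = {name: terms(value) for name, value in inputs.items()}
    strength = {"Low": 0.0, "Medium": 0.0, "High": 0.0}
    for antecedent, level in RULES:
        firing = min(mu[var][term] for var, term in antecedent.items())
        strength[level] = max(strength[level], firing)
    best = max(strength, key=strength.get)
    return best if strength[best] > 0 else "Medium"  # no rule fired: assume a neutral default


def adapt(inputs: Dict[str, float]) -> str:
    """Hypothetical adaptation hook: offer an explanation when low trust is inferred."""
    return "show explanation" if infer_trust(inputs) == "Low" else "continue"


print(adapt({"stress": 0.9, "workload": 0.1, "performance": 0.1}))  # show explanation
```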

This work has several practical implications. Trust sensors let machines adapt their explanations and behaviours to the user's current cognitive and emotional state, improving transparency and user experience. Real-time trust estimation also closes the loop between AI behaviour and the human operator's trust, which is crucial in high-stakes settings such as emergency response.
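Closing the loop means each new trust estimate can change what the machine does next. Per-window estimates are noisy, so one plausible design, assumed here rather than taken from the study, smooths the class probabilities with an exponential moving average and switches behaviour only after a new level has led for several consecutive windows. The level-to-action mapping in `ACTIONS` and the default parameters are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

LEVELS = ("Low", "Medium", "High")
# Hypothetical mapping from inferred trust level to explanation policy.
ACTIONS = {"Low": "detailed explanations", "Medium": "standard explanations", "High": "brief explanations"}


def closed_loop(
    estimates: Iterable[Dict[str, float]], alpha: float = 0.3, hold: int = 2
) -> List[Tuple[str, str]]:
    """Smooth per-window trust probabilities and adapt only after a sustained change.

    alpha: weight of the newest window in the exponential moving average.
    hold: consecutive windows a new level must lead before behaviour changes.
    Both defaults are illustrative, not values from the study.
    """
    smoothed = {lvl: 1 / len(LEVELS) for lvl in LEVELS}
    current, candidate, streak = "Medium", None, 0
    log = []
    for probs in estimates:
        smoothed = {lvl: alpha * probs.get(lvl, 0.0) + (1 - alpha) * smoothed[lvl] for lvl in LEVELS}
        best = max(LEVELS, key=smoothed.__getitem__)
        if best == current:
            candidate, streak = None, 0  # estimate agrees with the current behaviour
        elif best == candidate:
            streak += 1  # the same new level leads again
        else:
            candidate, streak = best, 1  # a different level starts to lead
        if candidate is not None and streak >= hold:
            current, candidate, streak = candidate, None, 0
        log.append((current, ACTIONS[current]))
    return log


# Usage: one noisy "Low" window followed by steady "Medium" estimates leaves behaviour unchanged.
print(closed_loop([{"Low": 1.0}] + [{"Medium": 1.0}] * 3))
```

The `hold` parameter trades responsiveness for stability: a larger value avoids flickering adaptations but reacts later to a genuine drop in trust.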

Moreover, these models support dynamic, explainable AI systems that respond not just logically but affectively to human users, fostering more effective teaming and reducing the risk of automation surprises or mistrust. Finally, these models contribute to the design of trust-aware intelligent systems that can monitor, predict, and adjust to human trust fluctuations, paving the way for safer and more cooperative human-AI teams.

The study used data from 45 human participants for feature extraction, selection, classifier training, and model validation. However, the paper does not provide information about the specific psychophysiological measurements used in each approach, nor does it discuss the specific trust management algorithms that could be designed based on the presented trust sensor models.
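One common way to validate a general model on multi-participant data is to test it on people it never saw during training. A minimal sketch of leave-one-participant-out splitting follows; this scheme is an assumption for illustration, since the study's exact validation protocol is not described here, and the three windows per participant in the usage line are made up. A customized model would instead be split within each participant's own data.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_participant_out(participant_ids: Sequence[str]) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices), holding out one participant per fold.

    participant_ids[i] names the participant who produced sample i, so no
    participant ever appears on both sides of the same split.
    """
    for held_out in sorted(set(participant_ids)):
        train = [i for i, pid in enumerate(participant_ids) if pid != held_out]
        test = [i for i, pid in enumerate(participant_ids) if pid == held_out]
        yield train, test


# Usage: 45 participants with 3 windows each give 45 folds of 132 train / 3 test samples.
ids = [f"P{p:02d}" for p in range(45) for _ in range(3)]
folds = list(leave_one_participant_out(ids))
print(len(folds), len(folds[0][0]), len(folds[0][1]))  # 45 132 3
```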

According to its authors, this work is the first to use real-time psychophysiological measurements to develop a human trust sensor. Recent architectures for related EEG classification tasks rely on advanced deep learning methods [2], [3], which could further improve the accuracy and interpretability of user-state decoding and, consequently, of trust sensor models.

In summary, classifier-based empirical trust sensors leverage multimodal physiological signals, primarily EEG and GSR, analyzed through interpretable machine learning models to infer human trust levels in real time. This enables intelligent machines to adapt their responses dynamically, enhancing human-machine collaboration and trust in critical applications.

[1] [Paper Reference]

[2] [Advanced Deep Learning Method Reference 1]

[3] [Advanced Deep Learning Method Reference 2]

Science and technology are both integral to this study. On the technology side, it develops real-time trust sensor models for intelligent machines using machine learning. On the science side, it collects and analyses physiological signals such as EEG and GSR, which reflect human cognitive and affective states. In future applications, these trust sensors could underpin the affect-aware, explainable AI systems described above, potentially drawing on recent advances in deep learning.