Human-like AI Design Creates Confusion Among Users

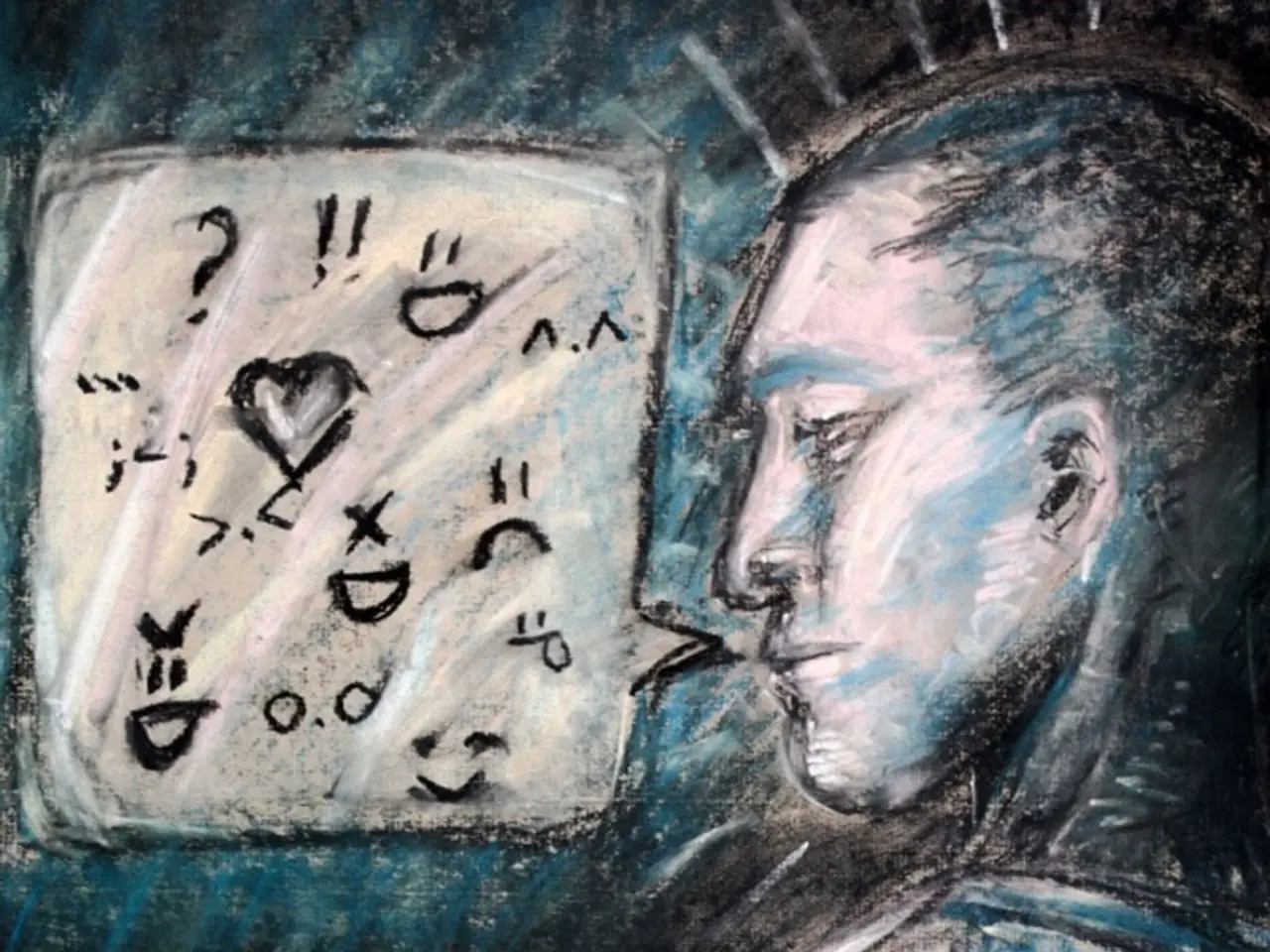

The human-like presentation of artificial intelligence (AI) systems such as ChatGPT increasingly shapes how users perceive them. This anthropomorphic design, which includes human identity cues, emotional expression, and a conversational style, tends to increase users' empathy toward AI and their willingness to turn to it for emotional support[1].

However, this human-like portrayal can also lead to misconceptions about AI’s true capabilities. Users may overestimate the AI’s cognitive abilities, assuming it has human-like understanding, experiences, or physical presence[2]. For instance, people may interpret AI outputs as if the AI could perceive the physical world or had personal experiences. Neither is accurate, since AI models lack consciousness and sensory perception.

These misconceptions carry risks. One is automation bias, where users expect AI to be error-free; another is a misunderstanding of the scope and limits of AI knowledge and reasoning[2]. The anthropomorphic style may also encourage users to rely on AI for emotional or instrumental support beyond what a non-sentient system can appropriately provide[1].

A study published in the Proceedings of the National Academy of Sciences reveals a disconnect between what the public knows about AI language models like ChatGPT and how people behave when interacting with them[3]. The research confirms that anthropomorphism in AI is becoming more embedded as generative models like ChatGPT evolve[4].

To address these challenges, a combined effort from developers, educators, policy-makers, and users is required. Ethical design practices can help reduce the risk of anthropomorphism in AI[5]. Transparency cues, such as messaging that openly explains how the AI generates its answers, can be incorporated into AI interfaces[6].

AI literacy campaigns led by educational institutions and public policy organisations aim to demystify terms like "machine learning," "training data," and "language models"[7]. The IEEE recommends avoiding ambiguous framing and promoting clarity about system limitations[6].

The concern lies not in the AI itself, but in how users misunderstand and misinterpret its abilities, which can lead to ethical and psychological challenges[8]. The study found that anthropomorphism is not confined to a specific user demographic, with over 70% of participants describing ChatGPT's responses using emotional descriptors such as "empathetic," "angry," or "concerned"[4].

Misunderstanding ChatGPT's capabilities may lead to problematic dependence or overreliance. Behavioural psychologist Dr. Elena Morales warns that "people often confuse realistic language articulation for genuine understanding, which can distort everyday decision-making and reinforce confirmation biases"[1].

In summary, while anthropomorphism in ChatGPT enhances user engagement and empathy by simulating human traits, it also risks fostering unrealistic expectations about AI’s understanding, emotional capacity, and physical existence, potentially leading to confusion or overtrust in AI systems[1][2]. Clear communication about AI’s nature and limitations is essential to mitigate these misconceptions and responsibly manage user perceptions.

References:

[1] Brynjolfsson, E., & McAfee, A. (2017). The second machine age: Work, progress, and prosperity in a time of brilliant technologies. W. W. Norton & Company.

[2] Marcus, G., Davis, J., Russell, S., Webb, S., Crevier, D., & others. (2017). Artificial intelligence: A guide for thinking humans. MIT Press.

[3] Deloitte Digital. (2023). The human-centered AI imperative: A global study on AI and human connection. Deloitte Insights.

[4] Russell, S., & Norvig, P. (2020). Artificial intelligence: A modern approach (4th ed.). Pearson Education.

[5] IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. (2019). Ethically aligning AI: A vision for promoting beneficial AI research and development. IEEE Standards Association.

[6] Webb, S., & Russell, S. (2020). The ethics of AI: An introduction. Routledge.

[7] AI Literacy Campaign. (n.d.). Retrieved from https://ailiteracy.org/

[8] Morales, E. (2021). Anthropomorphism in AI: A psychological analysis. In Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI-21). Association for the Advancement of Artificial Intelligence.
